Using PaaS services comes with many benefits: less infrastructure management, simplified scaling, and integrated automation, to name a few. Consider spinning up a Kubernetes cluster yourself versus spinning up an instance of AKS. I’ve done both, and I know which experience I’d prefer every day. To misquote a great movie: “With great power, comes less responsibility.”

One of the responsibilities taken care of for you is networking. If the actual network implementation is of little importance to you, PaaS is a great way to deploy services without setting up a complex virtual networking infrastructure. If, on the other hand, you want to integrate those PaaS services with existing infrastructure, you might want to take control of the network traffic. Or, if you need tighter security control, you might also want to control the network security of your PaaS services.

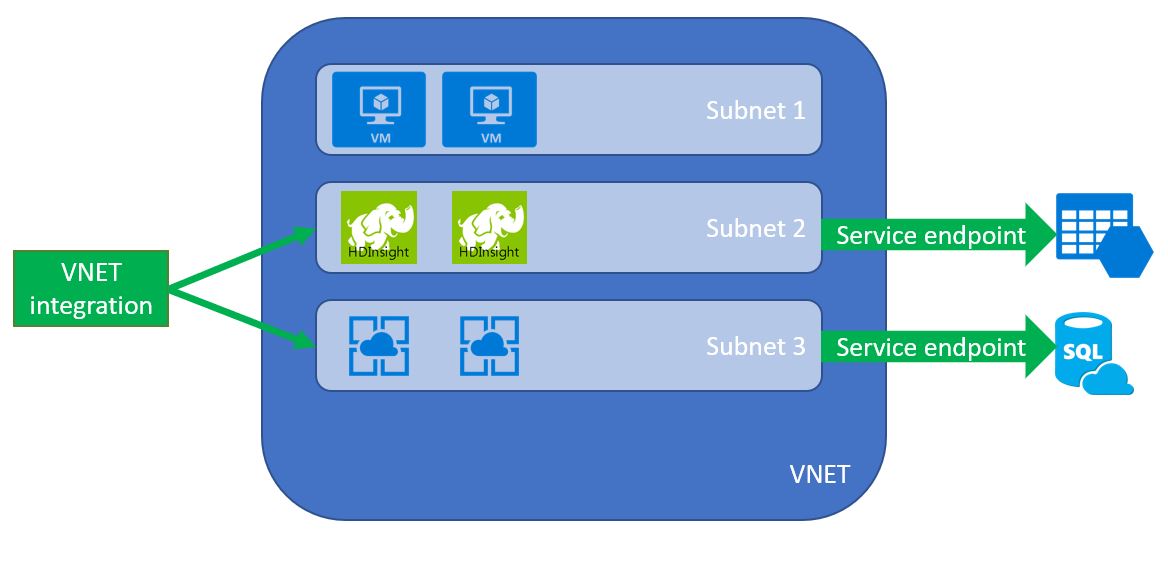

With Azure PaaS services, there are two ways to control the networking of those services: VNET integration (also called injection) or Service Endpoints. VNET integration deploys PaaS services into your VNET’s address space, whereas Service Endpoints create a tunnel between your VNET and the PaaS service through the Azure backbone. I’ll dive into both in this blog post, and will follow up with another post containing a practical walkthrough on how to set them up for a couple of different services. Which of the two options you can use is dictated by the service itself.

To close this intro, there is a special case of PaaS integration: Hybrid Connections and VNET integration (P2S) in App Service. Both allow App Service to communicate with services in your VNET. Hybrid Connections do this by using a Service Bus hybrid connection and pointing DNS to the Service Bus, whereas VNET integration (P2S) uses a point-to-site VPN to communicate between your App Service and your VNET. I won’t dive into these integrations (unless by popular demand).

VNET integration (aka injection)

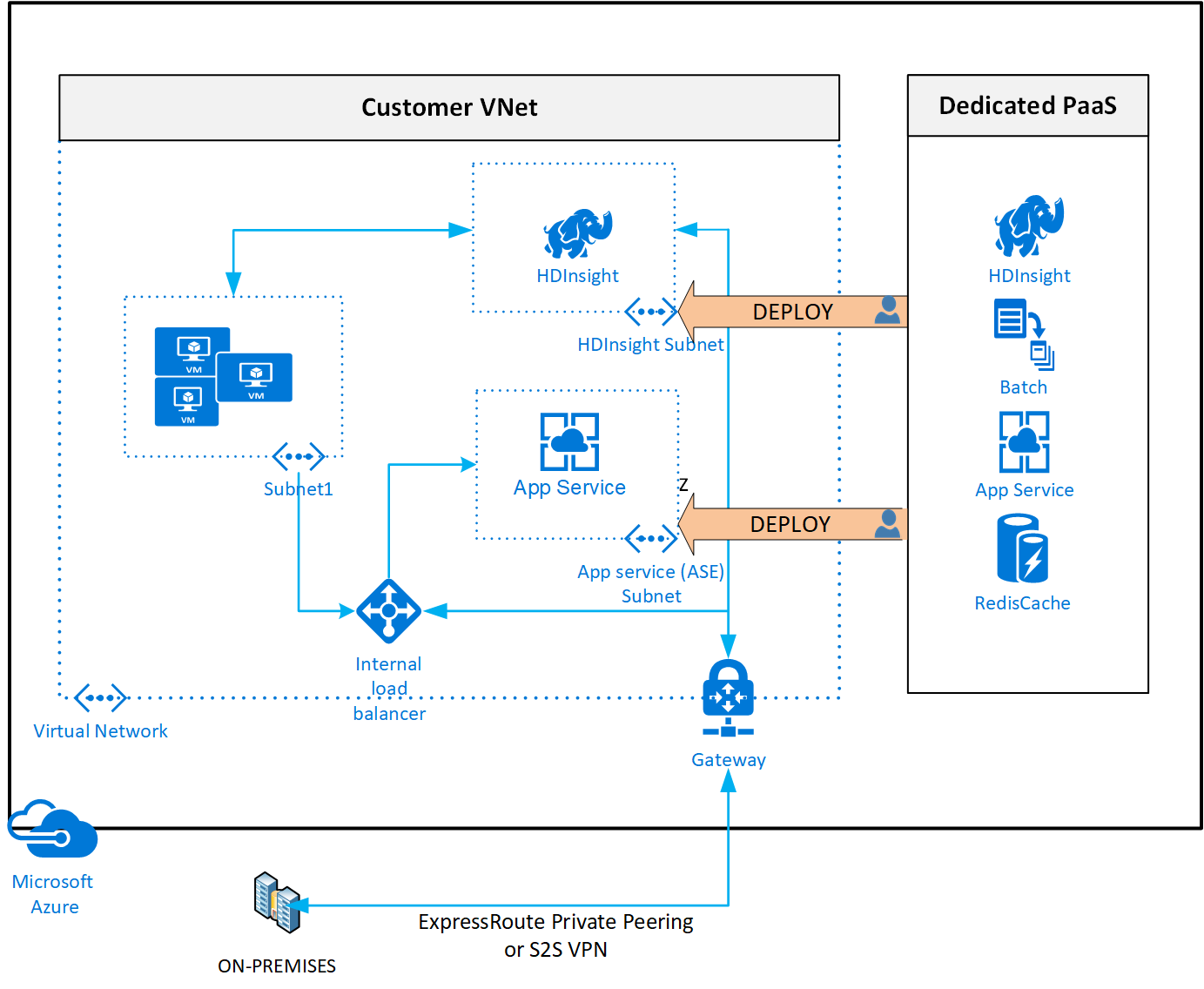

VNET integration deploys your PaaS service into a subnet in your VNET, which means your service is addressable using private IP(s). In most cases, however, VNET integration requires a dedicated subnet, so reserve enough IP address space in your VNET to give each of those services its own subnet.
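Because these services typically get a subnet of their own, it pays to size that subnet before you deploy. The Python sketch below relies on two documented Azure rules: every subnet loses five addresses to the platform (the network address, the default gateway, two Azure DNS addresses and the broadcast address), and /29 is the smallest IPv4 subnet Azure supports. The helper names are hypothetical, and the number of IPs a given service needs is an assumption you should take from that service’s documentation.

```python
import ipaddress

# Azure reserves five addresses in every subnet: the network address,
# the default gateway (.1), two Azure DNS addresses (.2, .3) and the
# last (broadcast) address.
AZURE_RESERVED_PER_SUBNET = 5
SMALLEST_AZURE_PREFIX = 29  # /29 is the smallest IPv4 subnet Azure allows


def usable_addresses(cidr: str) -> int:
    """Addresses left for resources in an Azure subnet of this size."""
    return ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


def smallest_prefix_for(required_ips: int) -> int:
    """Longest prefix (i.e. smallest subnet) that still fits `required_ips`."""
    for prefix in range(SMALLEST_AZURE_PREFIX, 0, -1):
        if 2 ** (32 - prefix) - AZURE_RESERVED_PER_SUBNET >= required_ips:
            return prefix
    raise ValueError("requirement does not fit in an IPv4 subnet")


if __name__ == "__main__":
    # Hypothetical example: a service that needs 30 private IPs.
    prefix = smallest_prefix_for(30)
    print(f"/{prefix}: {usable_addresses(f'10.0.1.0/{prefix}')} usable addresses")
```

Note how quickly the reserved addresses bite on small subnets: a /29 leaves only three usable IPs, which is why it helps to plan with some headroom.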

Although VNET integration means a service is deployed using private IPs, your service can still have a public IP assigned to it. In some cases, a public IP is both mandated and required, such as with a VPN gateway: what’s the point of a VPN if it doesn’t have a public IP? In other cases, it is mandated but might not be fully required for the end user. One such example is HDInsight, which still uses a public IP to allow you to do cluster management on https://CLUSTERNAME.azurehdinsight.net. However, you could also manage your cluster through its private management endpoint https://CLUSTERNAME-int.azurehdinsight.net, so from my point of view the public IP is not ‘fully required’. In yet other cases, a public IP is neither mandated nor necessarily required. One such example is AKS (Azure Kubernetes Service), which deploys into a private subnet and communicates with the Kubernetes master nodes through the kubelet. You only add a public IP to the services you expose on the Kubernetes cluster.

One advantage of VNET integration is that you can apply NSGs and UDRs to your subnets: NSGs control inbound and outbound traffic, and UDRs control how traffic is routed. Each service has its own particularities about whether NSGs and UDRs are allowed and/or supported, so be very mindful when you implement them.

With all your PaaS services integrated and secured through NSGs, you still need to give Azure enough access to manage those PaaS services. Each service clearly documents its requirements: which ports should be opened for which IP address blocks. For instance, an App Service Environment requires you to open ports 454 and 455 for management traffic from the ASE management addresses. One requirement all services share is allowing inbound traffic from the Azure Load Balancer health probe (168.63.129.16).
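NSG rules are evaluated in priority order, lowest number first, and evaluation stops at the first rule that matches. The simplified Python model below shows why management and health-probe rules must sit at a lower priority number than any custom deny-all rule: a custom deny at priority 4096 would also shadow Azure’s default AllowAzureLoadBalancerInBound rule (priority 65001). The model only matches on source prefix and destination port; real NSG rules also match on protocol, destination and service tags. The 203.0.113.0/24 range is a documentation placeholder standing in for the ASE management addresses, and the rule names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class InboundRule:
    name: str
    priority: int               # lower number = evaluated first
    source_prefix: str          # CIDR, or "*" for any source
    ports: Tuple[int, int]      # inclusive destination port range
    allow: bool


# Hypothetical rule set. 203.0.113.0/24 is a documentation range used as a
# placeholder for the ASE management addresses listed in the Azure docs.
RULES: List[InboundRule] = [
    InboundRule("AllowAseManagement", 100, "203.0.113.0/24", (454, 455), True),
    InboundRule("AllowAzureLbProbe", 110, "168.63.129.16/32", (0, 65535), True),
    InboundRule("DenyAllInbound", 4096, "*", (0, 65535), False),
]


def evaluate(rules: List[InboundRule], source_ip: str, port: int) -> Tuple[str, bool]:
    """Return (rule name, allowed) for the first matching rule by priority."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        source_ok = rule.source_prefix == "*" or src in ipaddress.ip_network(rule.source_prefix)
        port_ok = rule.ports[0] <= port <= rule.ports[1]
        if source_ok and port_ok:
            return rule.name, rule.allow
    # No rule matched. A real NSG would now apply its default rules
    # (AllowVnetInBound, AllowAzureLoadBalancerInBound, DenyAllInBound);
    # this sketch simply denies.
    return "no match", False


if __name__ == "__main__":
    print(evaluate(RULES, "203.0.113.10", 454))   # management traffic
    print(evaluate(RULES, "168.63.129.16", 80))   # health probe
    print(evaluate(RULES, "198.51.100.7", 454))   # anything else
```

Moving AllowAzureLbProbe to a priority above 4096 in this list would make the deny-all rule match health-probe traffic first, which is the misconfiguration the ordering is meant to avoid.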

When you deploy a service into your VNET, it is automatically accessible to connected on-prem resources (through either VPN or ExpressRoute) and to peered VNETs. This connectivity is bidirectional: the service can access resources on-prem (for instance, an ASE can connect to an internal API through a VPN), and on-prem resources can access your VNET-integrated PaaS service (for instance, an end user submitting a vacation request to your containerized vacation request tool hosted on AKS).

Multiple services support VNET integration (an up-to-date list can be found here):

- Azure Batch

- Application Gateway and WAF

- VPN gateway

- Azure Firewall

- Redis Cache

- Azure SQL DB Managed Instance

- HDInsight

- Azure Databricks

- Azure AD Domain Services

- Azure Kubernetes Service

- Azure Container Instances

- API Management

- App Service Environment

Service Endpoints

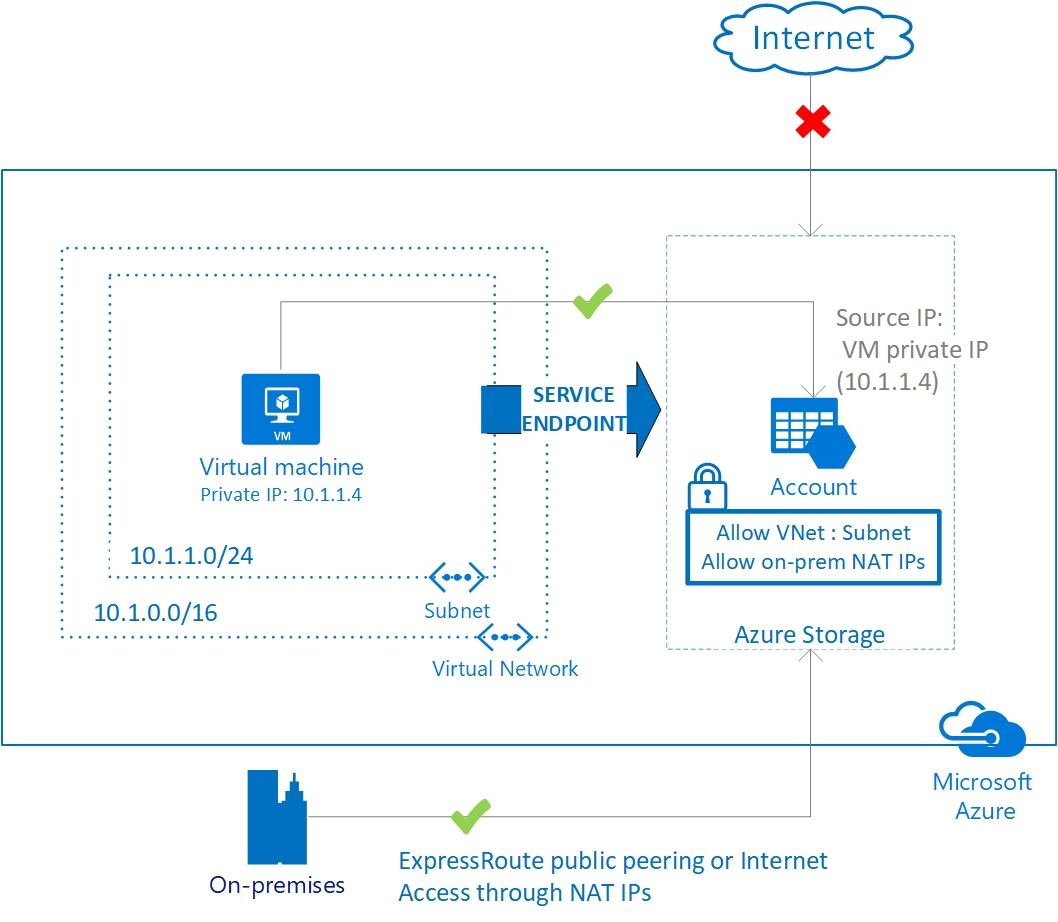

Service endpoints tunnel PaaS traffic through the Azure backbone. Your VMs or injected services use the same DNS name and public IP address to reach the service, but Azure reroutes the traffic through a tunnel in the backbone. Effectively, the platform adds a route to the route table of every subnet enabled for the service endpoint, and this service endpoint route takes precedence over BGP or UDR routes.
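To make that precedence concrete, here is a simplified Python model of route selection for a subnet. It assumes Azure’s documented behavior: routes are normally chosen by longest prefix match, with ties broken in the order user-defined route, BGP route, system route, while service endpoint routes cannot be overridden by a UDR or BGP route. The prefix 198.51.100.0/24 is a documentation range standing in for a PaaS service’s public addresses, and the route table itself is hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

# Tie-break order for routes with an equally long prefix (lower wins).
SOURCE_RANK = {"UDR": 0, "BGP": 1, "System": 2}


@dataclass(frozen=True)
class Route:
    prefix: str
    source: str     # "ServiceEndpoint", "UDR", "BGP" or "System"
    next_hop: str


def select_route(routes: List[Route], destination: str) -> Route:
    dst = ipaddress.ip_address(destination)
    matching = [r for r in routes if dst in ipaddress.ip_network(r.prefix)]
    if not matching:
        raise LookupError(f"no route to {destination}")
    # A matching service endpoint route wins outright, even against a
    # UDR or BGP route with a more specific prefix.
    endpoint_routes = [r for r in matching if r.source == "ServiceEndpoint"]
    candidates = endpoint_routes or matching
    # Longest prefix first, then UDR > BGP > System.
    return min(
        candidates,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen,
                       SOURCE_RANK.get(r.source, len(SOURCE_RANK))),
    )


# Hypothetical subnet route table with forced tunneling to a firewall.
ROUTES = [
    Route("0.0.0.0/0", "System", "Internet"),
    Route("0.0.0.0/0", "BGP", "VirtualNetworkGateway"),
    Route("0.0.0.0/0", "UDR", "VirtualAppliance"),
    Route("198.51.100.0/25", "UDR", "VirtualAppliance"),
    Route("198.51.100.0/24", "ServiceEndpoint", "VirtualNetworkServiceEndpoint"),
]

if __name__ == "__main__":
    print(select_route(ROUTES, "198.51.100.20").next_hop)  # the PaaS service
    print(select_route(ROUTES, "192.0.2.1").next_hop)      # everything else
```

Removing the service endpoint route from the list makes the more specific /25 UDR win for the same destination, which is exactly the behavior the endpoint route overrides.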

VNET service endpoints are linked to one or more subnets in your VNET, but not necessarily to all of them. This means you can effectively build a 3-tier architecture with the web, app, and DB tiers shielded from each other (imagine enabling the Azure SQL service endpoint only on the subnet that needs to reach the database). Additionally, service endpoints are not transitive: peered or connected networks cannot reach the service through that service endpoint. You can, however, connect one service to multiple subnets in different VNETs. You do need to respect regional boundaries for service endpoints. For on-prem resources, VNET service endpoints don’t work natively, but they could be made to work by hosting a proxy or router in a service-endpoint-enabled subnet and proxying or routing the traffic through that instance.
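These scoping rules can be summarized in a few lines. In this Python sketch, whether traffic uses a service endpoint depends only on the subnet it originates from: peering, VPN or ExpressRoute connectivity never lends one subnet’s endpoint to another network. The topology, subnet names and regions are hypothetical; “Microsoft.Sql” is the service endpoint name for Azure SQL, and the same-region check is a simplification of the regional boundary mentioned above (check each service’s documentation for its exact scope).

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical topology: subnet -> (region, enabled service endpoints).
# vnet-hub is peered with vnet-spoke; only spoke/app has the SQL endpoint.
SUBNETS: Dict[str, Tuple[str, Set[str]]] = {
    "vnet-spoke/web": ("westeurope", set()),
    "vnet-spoke/app": ("westeurope", {"Microsoft.Sql"}),
    "vnet-spoke/db": ("westeurope", set()),
    "vnet-hub/shared": ("westeurope", set()),
    "vnet-other/app": ("northeurope", {"Microsoft.Sql"}),
}


def uses_service_endpoint(source_subnet: Optional[str], service: str,
                          service_region: str) -> bool:
    """True if traffic from `source_subnet` reaches `service` via its endpoint.

    `source_subnet` is None for on-premises clients, which have no Azure
    subnet and therefore never use a service endpoint directly.
    Peering is deliberately ignored: endpoints are not transitive, so only
    the originating subnet's own configuration counts.
    """
    if source_subnet is None:
        return False
    region, endpoints = SUBNETS[source_subnet]
    return service in endpoints and region == service_region
```

The proxy workaround fits the same model: an on-prem client’s traffic is re-originated by a proxy VM in vnet-spoke/app, so the check is made against that subnet instead of returning False for the on-prem source.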

Once service endpoints are enabled, you can completely shield the PaaS service from the internet using the service’s firewall. The service keeps its public IP, but blocks all incoming requests except those you explicitly allow, such as traffic from your service-endpoint-enabled subnets.
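A simplified way to picture that firewall is as a default-deny filter with two kinds of exceptions: virtual network rules that name allowed subnets, and IP rules that name allowed public address ranges. This Python sketch is a conceptual model, not any service’s actual API. It assumes, as Azure documents for service endpoints, that endpoint traffic arrives with the VM’s private source address, so it is matched by subnet rather than by an IP rule. The subnet names and address ranges are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Request:
    source_ip: str
    via_subnet: Optional[str] = None   # set when the request uses a service endpoint


def firewall_allows(req: Request, vnet_rules: Sequence[str],
                    ip_rules: Sequence[str]) -> bool:
    """Default deny: allow only listed subnets or listed public IP ranges."""
    if req.via_subnet is not None:
        # Endpoint traffic carries a private source IP, so it is matched
        # against the virtual network rules, not the IP rules.
        return req.via_subnet in vnet_rules
    src = ipaddress.ip_address(req.source_ip)
    return any(src in ipaddress.ip_network(cidr) for cidr in ip_rules)
```

With an empty IP rule list, only the listed subnets get through, which is the “completely shielded from the internet” setup described above.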

When connecting to a service through a service endpoint, there are multiple security boundaries you have to cross:

- First of all, your subnet needs to be connected through the service endpoint. (L3 security – routing)

- Next, your NSG rules need to allow traffic to the PaaS service (outbound) (L4 security – ACL)

- Finally, you need application-level access to your service: for instance an account in Azure SQL or a SAS token for storage. (L7 security – application)
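The three boundaries are independent, and a request only succeeds when all of them pass. The Python sketch below checks them in the order listed and reports the first one that blocks. The field names and the example credential are hypothetical, and each boolean stands in for configuration you would verify in Azure.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Client:
    subnet_has_endpoint: bool        # L3: service endpoint enabled on the subnet
    nsg_allows_outbound: bool        # L4: NSG permits outbound traffic to the service
    credentials: Set[str] = field(default_factory=set)  # L7: e.g. SQL login, SAS token


def first_blocking_layer(client: Client, required_credential: str) -> Optional[str]:
    """Return the first boundary that blocks the request, or None if all pass."""
    checks = [
        ("L3 routing", client.subnet_has_endpoint),
        ("L4 ACL", client.nsg_allows_outbound),
        ("L7 application", required_credential in client.credentials),
    ]
    for layer, passed in checks:
        if not passed:
            return layer
    return None


if __name__ == "__main__":
    app_vm = Client(True, True, {"sql-login"})      # hypothetical credential
    print(first_blocking_layer(app_vm, "sql-login"))
```

Reporting the first failing layer mirrors how you would troubleshoot: confirm the route, then the NSG, then the credentials.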

It’s important to know that with service endpoints, traffic can only originate from resources inside your virtual network, not from the connected PaaS service.

Multiple services support connections through service endpoints (for an up-to-date list, check here):

- Azure Storage

- Azure SQL Database

- Azure Database for PostgreSQL server

- Azure Database for MySQL server

- Azure Cosmos DB

- Azure Key Vault

- Azure SQL DW (preview)

- Azure Service Bus (preview)

- Azure Event Hubs (preview)

- Azure Data Lake Store gen 1 (preview)

Conclusion

Azure offers two ways to connect PaaS services to your VNETs. With VNET integration, your service is deployed using a private IP in your existing networks. With service endpoints, a tunnel is created through the Azure backbone between your network and the PaaS service.

In a follow-up post, we’ll dive into how to set these up and test some traffic. If you can’t wait, head over to an earlier post on service endpoints for storage.