Last week, I wrote a post about how to create a Kubernetes cluster on Azure using kubeadm. It wasn’t as hard as Kubernetes the Hard Way, but it took some time to get everything set up and all the infrastructure spun up.

The goal of this post is to show an easier path to a non-AKS cluster on Azure: the Cluster API project. Cluster API provides a declarative API and tooling to create and manage Kubernetes clusters on different infrastructure providers.

For this post, I’ll be using the Cluster API Provider Azure (CAPZ). This is the Azure implementation of Cluster API, which spins up the Azure infrastructure the cluster runs on.

Let’s start!

Chicken and the egg: Using Kubernetes to create more Kubernetes

The Cluster API project uses the Kubernetes API to create new Kubernetes clusters. In practice, this means you need an existing Kubernetes cluster (the management cluster) to run Cluster API.

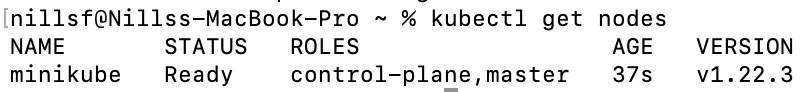

For that purpose, I spun up a minikube cluster on my laptop, an M1 MacBook Pro. I was pleasantly surprised that minikube worked out of the box without any issues.

brew install minikube

minikube start

kubectl get nodes

This gave me a local Kubernetes cluster to work with.

You can use any Kubernetes cluster to run Cluster API. For production use cases, I wouldn’t use minikube to create your clusters, but would rely on a production-grade Kubernetes cluster (for example, an AKS cluster).
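Because kubectl and clusterctl act on whatever cluster your current kubeconfig context points to, it can be worth guarding the later steps against running on the wrong cluster. A minimal sketch, assuming the management cluster’s context is named minikube (the name minikube start uses by default); require_context is a hypothetical helper, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail unless kubectl's current context matches the expected
# management cluster. "minikube" is the context name minikube creates by default.
require_context() {
  local expected="$1" current
  current="$(kubectl config current-context)" || return 1
  if [[ "$current" != "$expected" ]]; then
    echo "current context is '${current}', expected '${expected}'" >&2
    return 1
  fi
  echo "using management cluster context: ${current}"
}

require_context minikube || echo "switch with: kubectl config use-context minikube" >&2
```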

Installing the Cluster API tooling

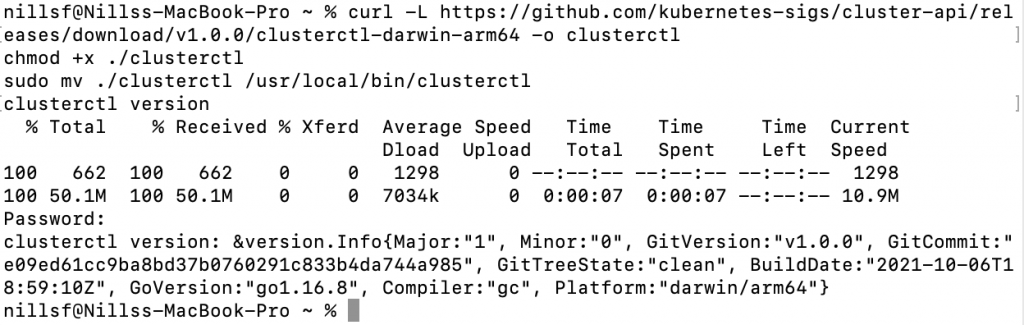

Next up, you need the Cluster API command-line tool, clusterctl. Check the Cluster API documentation to download the right binary for your environment (in my case, an M1 Mac):

curl -L https://github.com/kubernetes-sigs/cluster-api/releases/download/v1.0.0/clusterctl-darwin-arm64 -o clusterctl

chmod +x ./clusterctl

sudo mv ./clusterctl /usr/local/bin/clusterctl

clusterctl version

This also worked great (this is getting suspicious, isn’t it?).
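If you want the same steps to work on other machines, the download URL can be derived from uname. A sketch, assuming the release assets follow the clusterctl-&lt;os&gt;-&lt;arch&gt; naming used in the URL above (darwin or linux, amd64 or arm64); clusterctl_url is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the clusterctl download URL for this machine.
# Assumes release assets are named clusterctl-<os>-<arch>, as in the URL above.
clusterctl_url() {
  local version="$1" os arch
  os="$(uname -s | tr '[:upper:]' '[:lower:]')"   # "darwin" on macOS, "linux" on Linux
  arch="$(uname -m)"
  case "$arch" in
    x86_64) arch="amd64" ;;
    arm64 | aarch64) arch="arm64" ;;               # Apple silicon reports arm64, Linux aarch64
    *) echo "unsupported architecture: ${arch}" >&2; return 1 ;;
  esac
  echo "https://github.com/kubernetes-sigs/cluster-api/releases/download/${version}/clusterctl-${os}-${arch}"
}

clusterctl_url v1.0.0
```

To download with it, run curl -fL "$(clusterctl_url v1.0.0)" -o clusterctl, followed by the same chmod and mv steps.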

With that done, we can now go ahead and bootstrap Cluster API for Azure on minikube.

Setting up Cluster API for Azure

To set up Cluster API for Azure, you need a service principal. The service principal’s secret will be stored in a Kubernetes secret. To do this, you can use the following commands:

export AZURE_SUBSCRIPTION_ID=$(az account show | jq .id | tr -d '"')

# Create an Azure Service Principal

export SERVICE_PRINCIPAL=$(az ad sp create-for-rbac --name nfclusterapiblog --role Contributor --scopes "/subscriptions/${AZURE_SUBSCRIPTION_ID}" -o json)

export AZURE_TENANT_ID=$(echo $SERVICE_PRINCIPAL | jq .tenant | tr -d '"')

export AZURE_CLIENT_ID=$(echo $SERVICE_PRINCIPAL | jq .appId | tr -d '"')

export AZURE_CLIENT_SECRET=$(echo $SERVICE_PRINCIPAL | jq .password | tr -d '"')

# Base64 encode the variables

export AZURE_SUBSCRIPTION_ID_B64="$(echo -n "$AZURE_SUBSCRIPTION_ID" | base64 | tr -d '\n')"

export AZURE_TENANT_ID_B64="$(echo -n "$AZURE_TENANT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_ID_B64="$(echo -n "$AZURE_CLIENT_ID" | base64 | tr -d '\n')"

export AZURE_CLIENT_SECRET_B64="$(echo -n "$AZURE_CLIENT_SECRET" | base64 | tr -d '\n')"

# Settings needed for AzureClusterIdentity used by the AzureCluster

export AZURE_CLUSTER_IDENTITY_SECRET_NAME="cluster-identity-secret"

export CLUSTER_IDENTITY_NAME="cluster-identity"

export AZURE_CLUSTER_IDENTITY_SECRET_NAMESPACE="default"

# Create a secret to include the password of the Service Principal identity created in Azure

# This secret will be referenced by the AzureClusterIdentity used by the AzureCluster

kubectl create secret generic "${AZURE_CLUSTER_IDENTITY_SECRET_NAME}" --from-literal=clientSecret="${AZURE_CLIENT_SECRET}" --namespace "${AZURE_CLUSTER_IDENTITY_SECRET_NAMESPACE}"

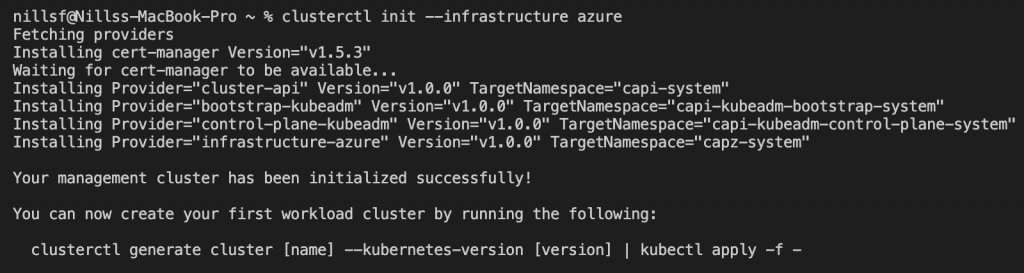
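If one of the jq lookups fails, the variable silently ends up empty or holding the string null (jq prints null for a missing field, and tr only strips the quotes), and clusterctl init then fails in a less obvious way. A small sanity check you could run first; check_azure_vars is a hypothetical helper, and its list of names simply mirrors the exports above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report any variable that is unset, empty, or the literal
# string "null" (what `jq .field` prints when the field is missing).
check_azure_vars() {
  local var value failed=0
  for var in AZURE_SUBSCRIPTION_ID AZURE_TENANT_ID AZURE_CLIENT_ID AZURE_CLIENT_SECRET \
    AZURE_SUBSCRIPTION_ID_B64 AZURE_TENANT_ID_B64 AZURE_CLIENT_ID_B64 AZURE_CLIENT_SECRET_B64; do
    value="${!var:-}"   # indirect expansion: value of the variable named by $var
    if [[ -z "$value" || "$value" == "null" ]]; then
      echo "missing or invalid: ${var}" >&2
      failed=1
    fi
  done
  return "$failed"
}

if check_azure_vars; then
  echo "all Azure variables are set"
else
  echo "fix the variables above before running clusterctl init" >&2
fi
```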

And then finally, you can set up Cluster API (for Azure) on the cluster:

clusterctl init --infrastructure azure

This sets up Cluster API, including the Azure provider, on the minikube cluster.
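clusterctl init may return before all of the provider controllers are running, so it can be useful to wait for their deployments before applying a cluster definition. A sketch, assuming the default namespaces clusterctl init uses for the core, kubeadm bootstrap, kubeadm control plane and Azure providers; wait_for_capi_providers is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: block until all deployments in the provider namespaces are Available.
# Namespace names are assumed to be the defaults used by clusterctl init.
wait_for_capi_providers() {
  local ns
  for ns in capi-system capi-kubeadm-bootstrap-system \
    capi-kubeadm-control-plane-system capz-system; do
    echo "waiting for deployments in ${ns}"
    kubectl wait --for=condition=Available deployment --all \
      --namespace "$ns" --timeout=5m || return 1
  done
}

wait_for_capi_providers
```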

Creating a Kubernetes cluster using Cluster API

We are now ready to create a new cluster. First, we’ll set some Azure environment variables:

export AZURE_LOCATION="westus2"

export AZURE_RESOURCE_GROUP="capz-trial"

# Select VM types.

export AZURE_CONTROL_PLANE_MACHINE_TYPE="Standard_D2s_v4"

export AZURE_NODE_MACHINE_TYPE="Standard_D2s_v4"

And then we can create the cluster definition:

clusterctl generate cluster capz-trial \
--kubernetes-version v1.21.0 \
--control-plane-machine-count=3 \
--worker-machine-count=3 \
> capz-trial.yaml

This creates a little over 200 lines of YAML, which you can find here.

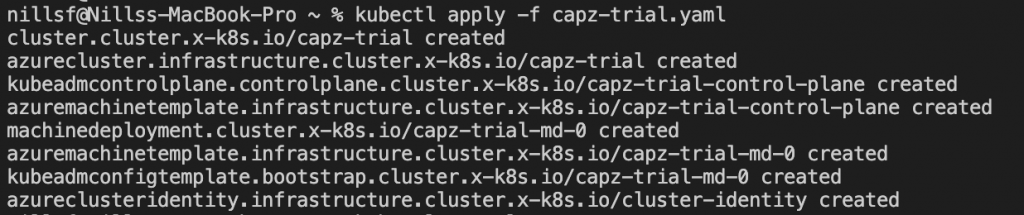
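To get a quick overview of what those 200 lines define, you can count the object kinds in the file. A sketch using grep, awk, sort and uniq, assuming every top-level kind: line starts at column 0 (nested references such as infrastructureRef are indented and therefore skipped); list_kinds is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: count the top-level object kinds in a generated cluster manifest.
# Assumes each document's "kind:" line starts at column 0; nested references
# (e.g. infrastructureRef.kind) are indented and therefore not matched.
list_kinds() {
  local manifest="$1"
  grep -E '^kind:' "$manifest" | awk '{print $2}' | sort | uniq -c
}

list_kinds capz-trial.yaml
```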

We can now create this cluster using:

kubectl apply -f capz-trial.yaml

This creates the necessary objects in our minikube cluster, which Cluster API then uses to create the cluster on Azure:

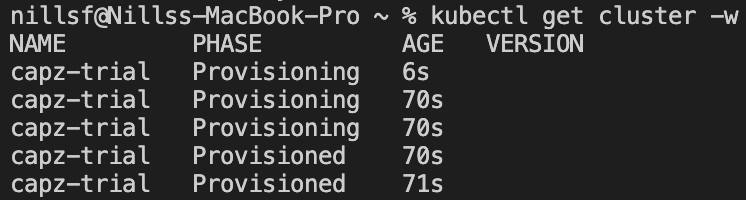

I then monitored the cluster using kubectl, and after about 70 seconds it reported as provisioned. I had stepped away, assuming it would take a couple of minutes, so I can’t confirm the cluster was actually operational after 70 seconds. If it was, that would be FAST.

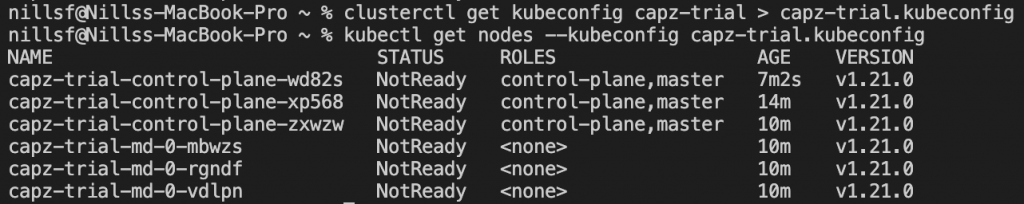
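Instead of watching the output yourself, you can poll the Cluster object’s status.phase until it reads Provisioned. A sketch, assuming it runs against the management cluster (minikube); wait_for_provisioned is a hypothetical helper. Note that Provisioned only says the infrastructure is up, not that the nodes are Ready, and clusterctl describe cluster capz-trial gives a more detailed view of the underlying conditions.

```shell
#!/usr/bin/env bash
# Sketch: poll the Cluster object on the management cluster until its
# status.phase is "Provisioned", or give up after a timeout (in seconds).
# Use a positive interval; with interval 0 the timeout would never advance.
wait_for_provisioned() {
  local name="$1" timeout="${2:-900}" interval="${3:-10}" elapsed=0 phase
  while (( elapsed < timeout )); do
    phase="$(kubectl get cluster "$name" -o jsonpath='{.status.phase}' 2>/dev/null)"
    echo "$(date +%T) ${name}: ${phase:-unknown}"
    if [[ "$phase" == "Provisioned" ]]; then
      return 0
    fi
    sleep "$interval"
    elapsed=$(( elapsed + interval ))
  done
  echo "timed out waiting for ${name} to be provisioned" >&2
  return 1
}
```

For example, wait_for_provisioned capz-trial 900 10 polls every 10 seconds for up to 15 minutes.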

We can get the kubeconfig to interact with this cluster using:

clusterctl get kubeconfig capz-trial > capz-trial.kubeconfig

And then, for instance, list the nodes:

kubectl --kubeconfig=./capz-trial.kubeconfig get nodes

The nodes aren’t ready yet because there’s no CNI installed. The CAPI project defaults to Calico, which is what we’ll set up (if you’re following the CAPI docs, make sure to install the Azure Calico manifest, because the default one doesn’t work):

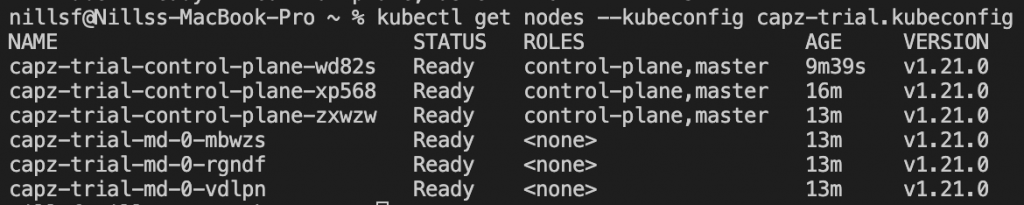

kubectl --kubeconfig=./capz-trial.kubeconfig \
apply -f https://raw.githubusercontent.com/kubernetes-sigs/cluster-api-provider-azure/main/templates/addons/calico.yaml

After that is applied, the nodes should be ready:

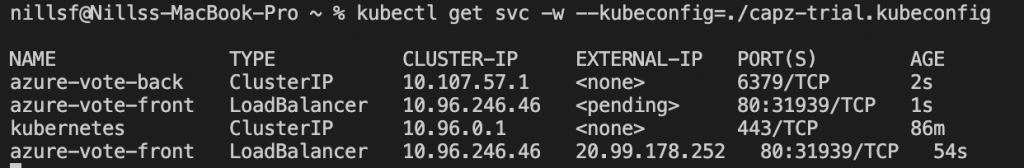

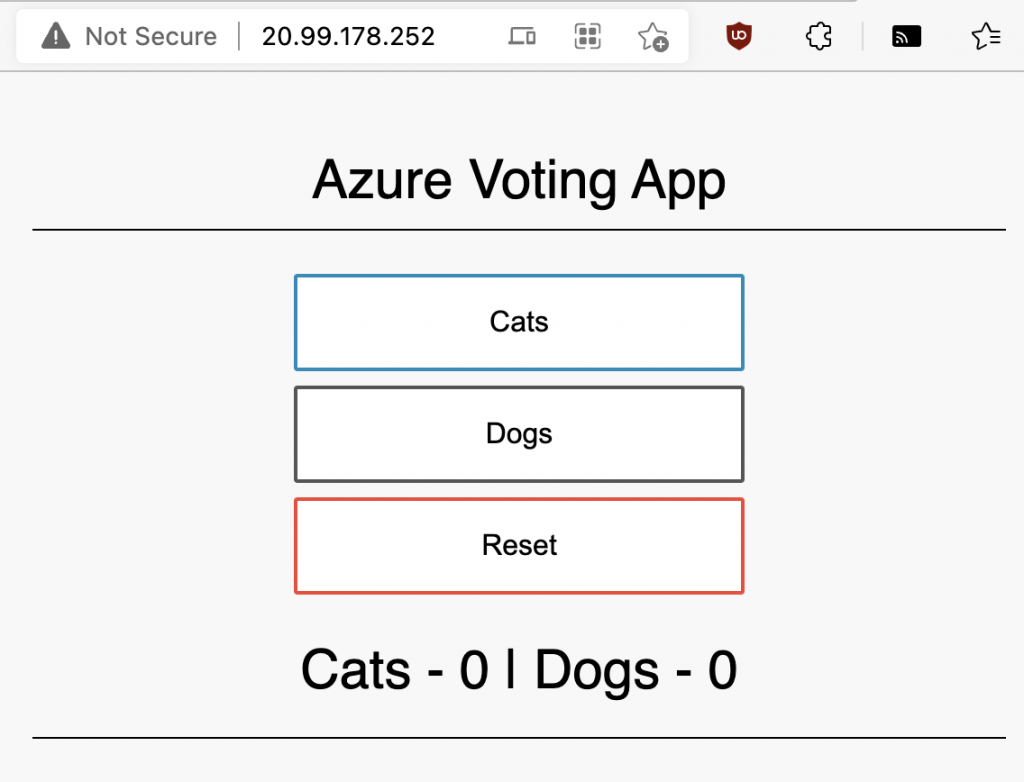
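Rather than re-running get nodes until they flip to Ready, kubectl wait can block until every node reports the Ready condition. A sketch; wait_for_nodes_ready is a hypothetical wrapper, and the 10-minute default timeout is an arbitrary choice:

```shell
#!/usr/bin/env bash
# Sketch: wait until every node in the workload cluster has condition Ready.
wait_for_nodes_ready() {
  local kubeconfig="$1" timeout="${2:-10m}"
  kubectl --kubeconfig="$kubeconfig" wait --for=condition=Ready nodes --all \
    --timeout="$timeout"
}

wait_for_nodes_ready ./capz-trial.kubeconfig
```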

Now we can deploy a sample application to verify everything is working. I like to use Azure Vote for this purpose. Because CAPZ sets up the Azure load balancer integration, we can use the public version of azure-vote, which includes an Azure load balancer with a public IP:

kubectl apply -f https://raw.githubusercontent.com/Azure-Samples/azure-voting-app-redis/master/azure-vote-all-in-one-redis.yaml --kubeconfig=./capz-trial.kubeconfig

This creates the workload and a service. To get the service details, you can use:

kubectl get svc -w --kubeconfig=./capz-trial.kubeconfig

This returns the service’s public IP once Azure has assigned it (-w keeps watching until you stop it with Ctrl+C).

By browsing to that public IP, you should see the Azure Vote application.
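If you prefer to check from the terminal, the IP can be read with a jsonpath query and probed with curl. A sketch, assuming the front-end service in the all-in-one manifest is named azure-vote-front; front_end_ip and check_front_end are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: look up the front-end service's public IP and check that it answers.
# Assumes the LoadBalancer service is named azure-vote-front.
front_end_ip() {
  local kubeconfig="$1"
  kubectl --kubeconfig="$kubeconfig" get svc azure-vote-front \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

check_front_end() {
  local ip
  ip="$(front_end_ip "$1")"
  if [[ -z "$ip" ]]; then
    echo "no public IP assigned yet" >&2
    return 1
  fi
  echo "front end: http://${ip}/"
  curl -sS -o /dev/null -w 'HTTP %{http_code}\n' "http://${ip}/"   # print only the status code
}

check_front_end ./capz-trial.kubeconfig
```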

And that’s how you create a CAPZ cluster!

Let’s also clean up after ourselves. We can do this with:

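kubectl delete cluster capz-trial

Deleting the Cluster object makes Cluster API remove the Azure resources it created, so let this command finish before removing the management cluster. Then we can also delete the minikube cluster: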

minikube delete

Summary

In this post we explored creating a non-AKS Kubernetes cluster on Azure using the Cluster API project. In my opinion, creating a cluster with Cluster API is a lot easier than with kubeadm: there’s less infrastructure to set up and fewer interactions with individual machines. Cluster API is also available for many infrastructure providers, which means you get consistent tooling across cloud providers.

The downside of using Cluster API is that you need an existing Kubernetes cluster to deploy the infrastructure from. Minikube and kind solve this for my demo use cases, but I wouldn’t use them in production.

One very interesting pattern that Cluster API unlocks is integrating your Kubernetes cluster definition (i.e., infrastructure as code) with GitOps. This is something I’m exploring at the moment and will very likely write about soon. Stay tuned!