At GitHub Universe in early December 2020, GitHub introduced several new GitHub Actions features focused on continuous delivery. In this blog post, we'll explore them in the context of deploying a simple web app to a Kubernetes cluster.

Before diving into what’s new, let’s have a look at what GitHub Actions are:

What are GitHub Actions?

GitHub Actions is a workflow automation platform integrated with GitHub repositories. When it was first launched in 2018, Actions mainly targeted automating workflows in and around your GitHub repo. Since then, Actions has grown into a continuous integration and continuous delivery platform, and the new announcements make it even better suited to continuous delivery.

Actions are integrated into your GitHub repository. A repository can have multiple workflows, each defined in a YAML file stored in the repository's .github/workflows directory (this post loosely calls each of these workflows an Action). Each Action can be triggered by one or more events on your repo, such as a push to the main branch, a new pull request, a new issue, and many other events.

Within an Action, you define one or more jobs. Each job consists of a sequence of steps, where each step is a command or set of commands to run. By default, jobs run in parallel, while the steps within a job run one after another. A step can be as simple as running a command on the command line, or it can use one of the more than 6,000 Actions available in the GitHub Marketplace.
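As a minimal sketch of this ordering (the workflow name, job names, and echo commands are hypothetical placeholders), the workflow below starts lint and unit-tests in parallel, runs the two steps inside unit-tests one after the other, and uses the `needs` keyword to hold package back until both of the other jobs have succeeded.

```yaml
# Minimal sketch: job names and echo commands are hypothetical placeholders.
name: ordering-demo

on:
  push:
    branches: [ main ]

jobs:
  lint:                            # no "needs": starts right away
    runs-on: ubuntu-latest
    steps:
      - run: echo "linting"
  unit-tests:                      # also no "needs": runs in parallel with lint
    runs-on: ubuntu-latest
    steps:
      - run: echo "step 1 runs first"
      - run: echo "step 2 runs after step 1"   # steps within a job are sequential
  package:
    needs: [ lint, unit-tests ]    # waits until both jobs have succeeded
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - run: echo "packaging"
```

Without the `needs` line, package would simply start in parallel with the other two jobs.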

A GitHub Actions example

Here is an example GitHub Action that I used in an earlier blog post. Let's walk through its important parts:

```yaml
name: build_and_push

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
```

First, we cover the basics. We give the Action a name (in this case, build_and_push) and define when it runs: here, on pushes and pull requests against the main branch.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
```

Next come the job definitions. In this Action I use only a single job, but the work could have been split across several jobs. Here, we specify that the job runs on ubuntu-latest. You could also run it on GitHub-hosted Windows or macOS runners, or on a self-hosted runner.
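As an aside, switching runner types is a one-line change to `runs-on`. In this sketch (the job names are hypothetical), windows-latest and macos-latest select GitHub-hosted runners, while the self-hosted label targets a runner you have registered yourself.

```yaml
# Sketch: the same trivial job on three runner types (job names are hypothetical).
jobs:
  on-windows:
    runs-on: windows-latest        # GitHub-hosted Windows runner
    steps:
      - run: echo "Hello from Windows"
  on-macos:
    runs-on: macos-latest          # GitHub-hosted macOS runner
    steps:
      - run: echo "Hello from macOS"
  on-own-machine:
    runs-on: self-hosted           # any runner you have registered yourself
    steps:
      - run: echo "Hello from a self-hosted runner"
```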

```yaml
    steps:
      - uses: actions/checkout@v2
      - name: az login
        # Service principal credentials come from the repository's secrets
        run: az login --service-principal --username ${{ secrets.APP_ID }} --password ${{ secrets.PASSWORD }} --tenant ${{ secrets.TENANT_ID }}
      - name: Build Docker Container
        # The workflow run number is used as the image tag
        run: docker build . -t nfvnas.azurecr.io/python-admission/python-admission:${{ github.run_number }}
      - name: ACR login
        run: az acr login -n nfvnas
      - name: Push container
        run: docker push nfvnas.azurecr.io/python-admission/python-admission:${{ github.run_number }}
      - name: Update deployment
        # Rewrite the image tag in deploy.yaml to the newly pushed version
        run: sed -i 's|nfvnas.azurecr.io/python-admission/python-admission:.*|nfvnas.azurecr.io/python-admission/python-admission:${{ github.run_number }}|gi' deploy.yaml
      - name: Get AKS credentials
        run: az aks get-credentials -n win-aks -g win-aks
      - name: Update admission controller
        run: kubectl delete -f admission.yaml
        # Let the job continue even if this step fails
        continue-on-error: true
      # ...
```

Finally, we get to the meat and potatoes of the Action: the actual steps. As you can see, most of the steps are shell commands run on the GitHub Actions runner. Together, they build the new container image and then deploy it to Kubernetes. You can find the full definition of the Action in my GitHub repo.

Two things in these steps are worth pointing out:

- Secrets: To log in to Azure with the Azure CLI (az login), I'm using service principal credentials stored as GitHub secrets.

- Variables: I'm using the github.run_number value, which increases by one with each new run of the workflow, as the container image tag, so every build gets a new version. Another option would have been to use the SHA of the Git commit.
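If you prefer the commit-SHA option from the last bullet, the build and push steps could look like the sketch below. This is not what the original workflow does; `github.sha` is the full SHA of the commit that triggered the run, and any other step that references the tag (such as the sed step) would need the same change.

```yaml
      # Sketch: tag the image with the commit SHA instead of the run number.
      - name: Build Docker Container
        run: docker build . -t nfvnas.azurecr.io/python-admission/python-admission:${{ github.sha }}
      - name: Push container
        run: docker push nfvnas.azurecr.io/python-admission/python-admission:${{ github.sha }}
```

A SHA tag ties each image directly to a commit, while the run number gives short, ordered version numbers; which one is preferable depends on how you want to trace releases.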

What’s new for continuous delivery in GitHub Actions

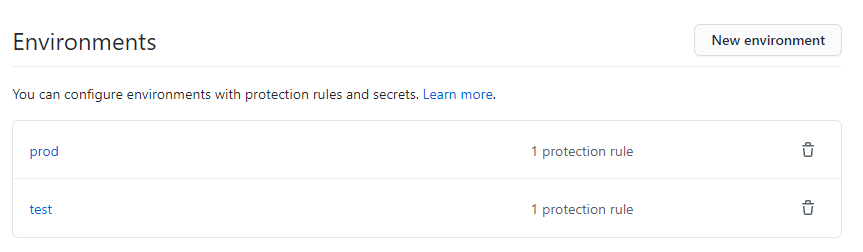

I'm writing this blog post to cover what's new in GitHub Actions for continuous delivery. GitHub Actions has introduced environments, which help with continuous delivery on two fronts:

- Environment protection rules: These rules let you pause a job that deploys to an environment. The job continues only after one of the designated reviewers has approved the deployment and/or a specified wait timer has elapsed.

- Environment secrets: With environments, GitHub Actions also introduced secrets scoped to a specific environment. As a deployment moves through dev, stage, and production, each environment can hold a different value for a secret while the secret keeps the same name.
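To make environment secrets concrete, here is a minimal sketch of two jobs that read a secret with the same name. The secret name DB_PASSWORD and the deploy.sh script are hypothetical placeholders; the point is that each job resolves secrets.DB_PASSWORD against the environment it declares.

```yaml
# Minimal sketch: DB_PASSWORD and deploy.sh are hypothetical placeholders.
jobs:
  deploy-test:
    runs-on: ubuntu-latest
    environment: test              # secrets resolve against the "test" environment
    steps:
      - uses: actions/checkout@v2
      - name: deploy
        run: ./deploy.sh
        env:
          DB_PASSWORD: ${{ secrets.DB_PASSWORD }}
  deploy-prod:
    needs: deploy-test
    runs-on: ubuntu-latest
    environment: prod              # same secret name, value stored on "prod"
    steps:
      - uses: actions/checkout@v2
      - name: deploy
        run: ./deploy.sh
        env:
          DB_PASSWORD: ${{ secrets.DB_PASSWORD }}
```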

Let’s have a look at what this means in a practical demo:

Demo of continuous delivery in GitHub Actions on a Kubernetes cluster

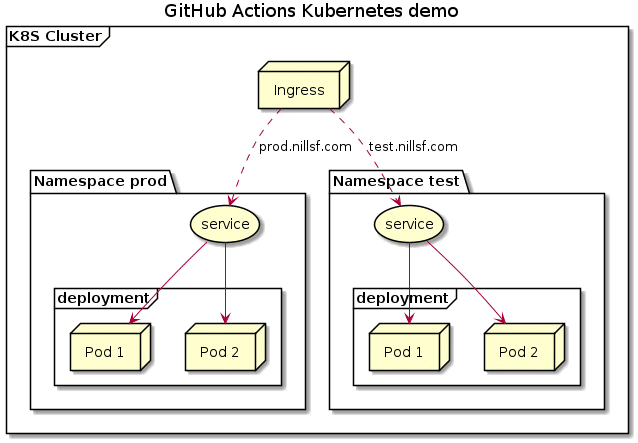

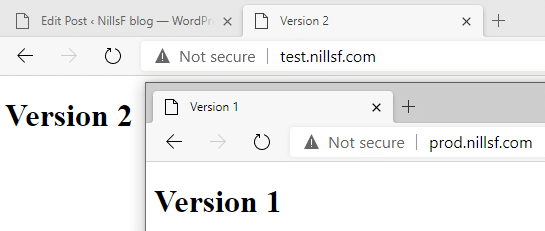

For our continuous delivery demo, we'll build a very lightweight website that shows the version of our software. We'll deploy it to a Kubernetes cluster, where a single ingress will distribute traffic between test and prod. All of the code is hosted on GitHub. This is what the architecture looks like (don't worry, it's less complicated than it looks):
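The demo's Kubernetes manifests (test.yaml and prod.yaml) aren't shown in this post, so the following is only one possible way to express the ingress part of the architecture. Because a Kubernetes Ingress can only route to Services in its own namespace, this sketch assumes one shared ingress controller and one Ingress object per namespace. The host names, the nginx ingress class, and the Service names and port are all assumptions.

```yaml
# Sketch only: hosts, ingress class, and Service names/port are assumptions.
# One Ingress per namespace, both served by the same ingress controller.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: website
  namespace: test
spec:
  ingressClassName: nginx
  rules:
    - host: test.example.com       # hypothetical host for the test site
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: test-website # hypothetical Service in the test namespace
                port:
                  number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: website
  namespace: prod
spec:
  ingressClassName: nginx
  rules:
    - host: www.example.com        # hypothetical host for the prod site
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prod-website # hypothetical Service in the prod namespace
                port:
                  number: 80
```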

To focus on the purpose of this blog post, I have already done the following:

- Created an Azure Container Registry (ACR) and an AKS cluster.

- Created a GitHub Action to build the container image and push it to ACR.

So far, our GitHub action looks like this:

```yaml
name: Build-push-deploy

on:
  push:
    branches: [ main ]
  workflow_dispatch:

jobs:
  build-push:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: az login
        run: az login --service-principal --username ${{ secrets.APP_ID }} --password ${{ secrets.PASSWORD }} --tenant ${{ secrets.TENANT_ID }}
      - name: Build Docker Container
        run: docker build . -t nfacr.azurecr.io/webdemo/webdemo:${{ github.run_number }}
      - name: ACR login
        run: az acr login -n nfacr
      - name: Push container
        run: docker push nfacr.azurecr.io/webdemo/webdemo:${{ github.run_number }}
```

Let's pick things up here by creating the deployment job for the test namespace. First, we'll create an environment so that we can enforce manual approval:

The GIF above shows the steps we took to create the environment. Now let's add a job to our Action that uses this environment and its manual approval step.

```yaml
  deploy-test:
    needs: build-push              # run only after the image has been pushed
    runs-on: ubuntu-latest
    environment: test              # applies the "test" environment's protection rules
    steps:
      - uses: actions/checkout@v2
      - name: az login
        run: az login --service-principal --username ${{ secrets.APP_ID }} --password ${{ secrets.PASSWORD }} --tenant ${{ secrets.TENANT_ID }}
      - name: get AKS credentials
        run: az aks get-credentials -n nfaks -g gh-actions
      - name: deploy (will deploy base infra if not exists)
        run: kubectl create -f test.yaml
        continue-on-error: true
      - name: update deployment image
        run: kubectl set image deployment/test-website -n test website=nfacr.azurecr.io/webdemo/webdemo:${{ github.run_number }}
```

I committed this to the main branch of the GitHub repo to trigger the Action. To make the update visible, I also changed the index.html file to show that version 2 is live.
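One optional addition, not part of the original workflow: kubectl set image returns as soon as the Deployment has been updated, so the job can report success before the new pods are actually running. A final step that waits on the rollout, sketched below, makes the job fail if the rollout doesn't finish; the 120-second timeout is an arbitrary assumption.

```yaml
      # Hypothetical extra step, appended to the steps of deploy-test.
      # Waits for the Deployment's rollout to finish and exits non-zero
      # (failing the job) if it hasn't finished within the timeout.
      - name: wait for rollout
        run: kubectl rollout status deployment/test-website -n test --timeout=120s
```

The same step, with prod-website and the prod namespace, would fit at the end of the prod job.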

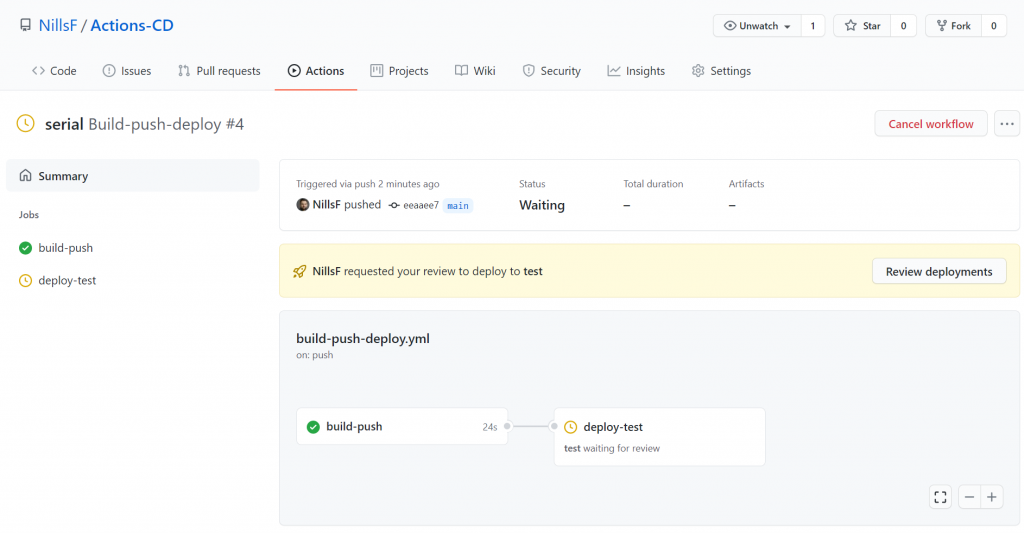

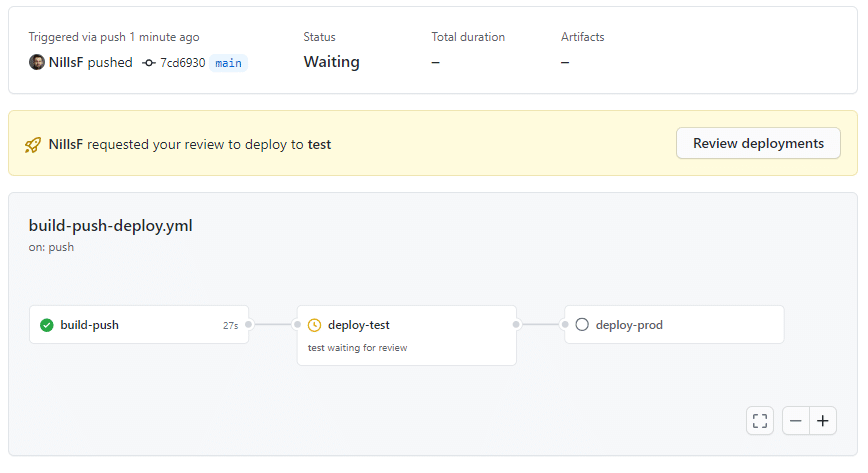

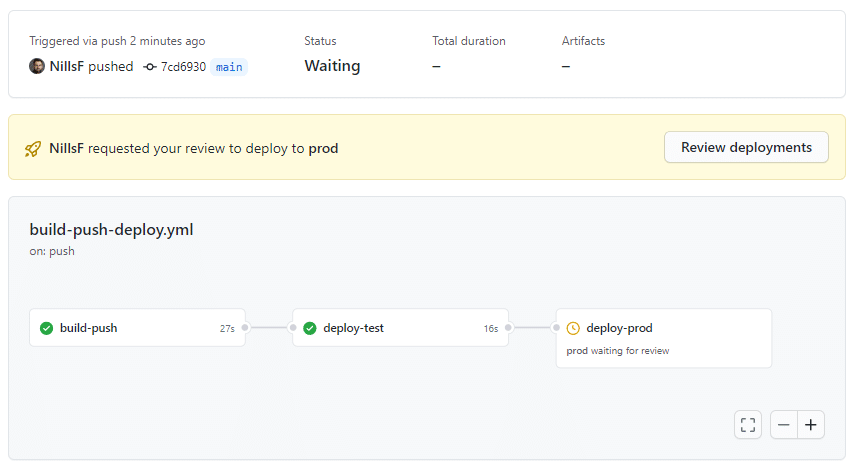

Committing this to the main branch did indeed trigger the Action, and after a few seconds for the build job to finish, the workflow paused for manual approval:

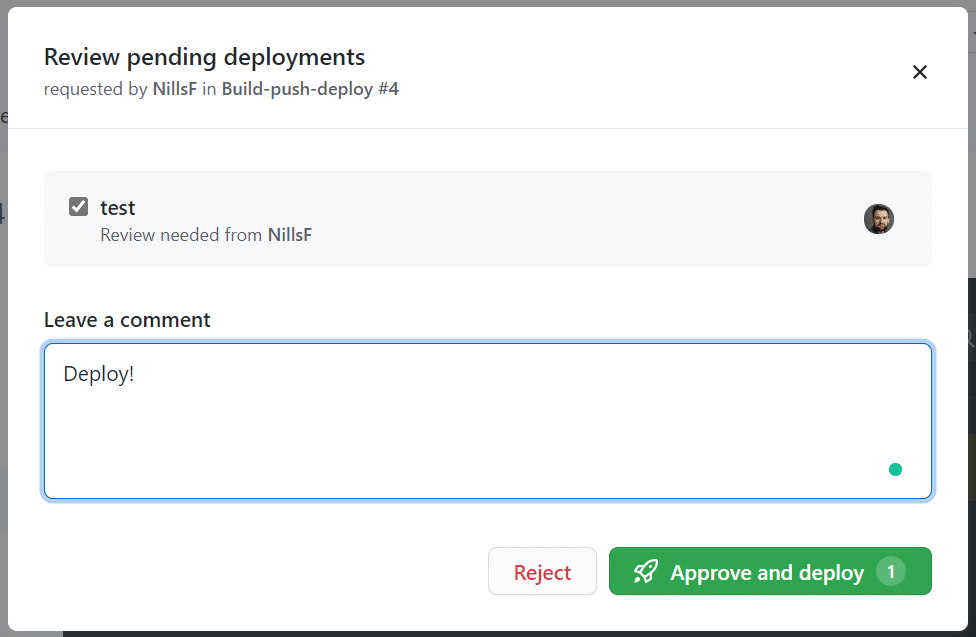

Clicking the Review deployments button lets us approve the deployment and continue.

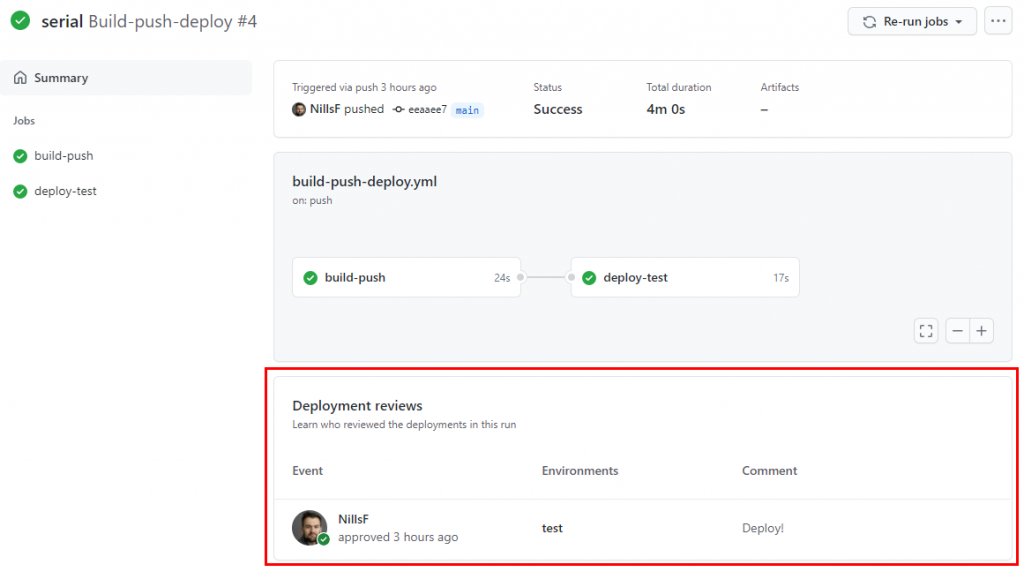

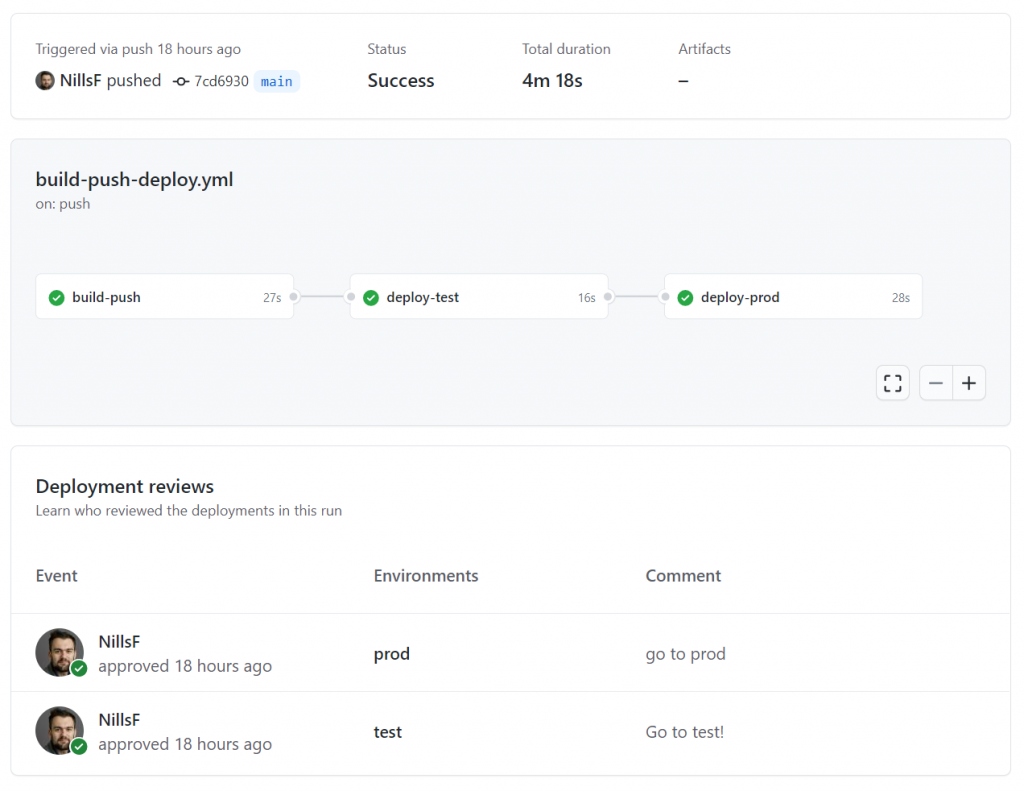

Once the deployment is complete, you can see who approved the release:

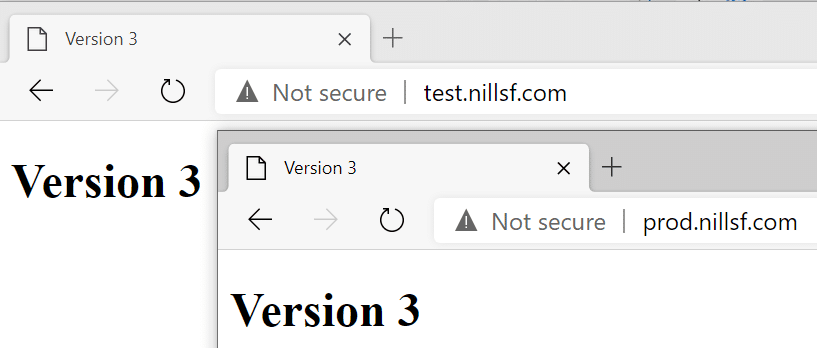

And the release did actually update the deployment on the Kubernetes cluster:

We can do the same thing for prod. The prod job looks very similar to the test job; the only difference is that it deploys to prod rather than to test.

```yaml
  deploy-prod:
    needs: deploy-test             # run only after the test deployment succeeds
    runs-on: ubuntu-latest
    environment: prod              # applies the "prod" environment's protection rules
    steps:
      - uses: actions/checkout@v2
      - name: az login
        run: az login --service-principal --username ${{ secrets.APP_ID }} --password ${{ secrets.PASSWORD }} --tenant ${{ secrets.TENANT_ID }}
      - name: get AKS credentials
        run: az aks get-credentials -n nfaks -g gh-actions
      - name: deploy (will deploy base infra if not exists)
        run: kubectl create -f prod.yaml
        continue-on-error: true
      - name: update deployment image
        run: kubectl set image deployment/prod-website -n prod website=nfacr.azurecr.io/webdemo/webdemo:${{ github.run_number }}
```

Before committing this to Git, let's also create the second environment:

After creating the prod environment, we can commit the updated Action together with an HTML update so we can verify the deployment. The first thing that happens is that we're asked to approve the test deployment:

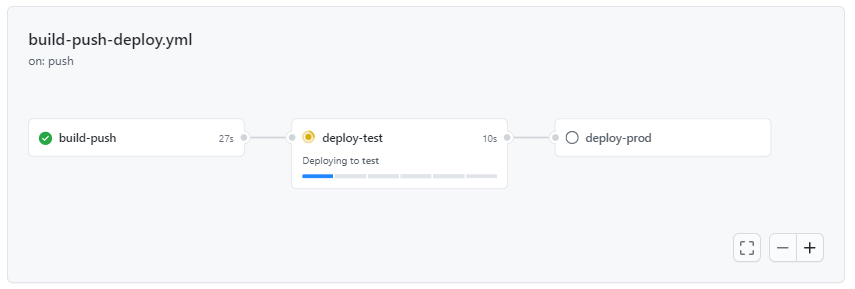

We can approve this the same way as before. What I hadn't noticed the first time is that there's a neat little progress bar that tracks the deployment status.

Once the test deployment finishes, we are asked to review the production deployment:

After we approve the deployment to prod, we can see the full deployment history, including who approved each release.

And if we check both web pages, both are running on v3 now.
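Since we checked both web pages by hand, one small optional refinement (not used in this demo) is the long form of the environment keyword, which accepts a url alongside the environment name; GitHub then shows that URL as a link next to the deployment. The URL below is a hypothetical placeholder.

```yaml
    # In deploy-prod, replaces the short form "environment: prod".
    environment:
      name: prod
      url: https://www.example.com   # hypothetical address of the prod website
```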

And that’s how you can use the deployment review feature in GitHub Actions.

Summary

In this post, we reviewed the recent GitHub Actions updates related to continuous delivery. The new environments in GitHub Actions let you require manual approval or add time-based delays. We explored the manual approval step in this post.

Another interesting new feature is the ability to have environment-scoped secrets. We didn’t explore that in this blog post, but with environment-scoped secrets, you could use the same secret name but have different values per environment.